Insight Blog

Agility’s perspectives on transforming the employee's experience throughout remote transformation using connected enterprise tools.

29 minutes reading time

(5729 words)

AI Privacy and Security in Collaboration Tools: What’s Really Happening to Your Data?

AI privacy and security risks in collaboration tools explained. Learn how AI collects data, real privacy issues, laws, and how to protect your business.

AI didn't quietly slip into collaboration tools — it kicked the door in.

One minute your team was chatting, sharing files, and jumping on calls. The next, AI is summarising conversations, analysing sentiment, auto-tagging documents, and "helping" people work faster. On the surface, it looks like progress.

Underneath, it's a massive AI privacy problem most organisations didn't sign up for.

Modern collaboration platforms now see everything: private messages, shared files, meeting transcripts, voice calls, reactions, usage patterns, and metadata about who talks to whom and how often.

When AI is layered on top of that, the volume and sensitivity of data being processed explodes. This is where AI and data privacy stop being an IT concern and become a board-level risk.

The uncomfortable truth?

AI doesn't reduce risk — it expands the attack surface.

Every AI feature needs access.

Every access point is another place data can leak, be misused, retained too long, or analysed in ways users never expected. That's why artificial intelligence and data protection are now inseparable. You can't talk about one without the other.

What's changed recently is this: privacy failures are no longer theoretical.

We've seen real examples of confidential chats resurfacing, sensitive data being used for model training, and organisations discovering too late that their collaboration tools were collecting far more data than anyone realised.

Boards are paying attention because the fallout is serious — regulatory fines, legal exposure, reputational damage, and loss of trust from employees and customers.

Once trust is gone, no amount of AI productivity gains will save you.

AI in collaboration tools is here to stay. But pretending it's safe by default is how companies get burned.

Key Takeaways

- AI in collaboration tools expands privacy risk because it needs broad access to chats, files, meetings, and metadata to work.

- Most “does AI sell your data” confusion comes from vague wording; the real risk is reuse, retention, and training boundaries you can’t verify.

- Shadow AI is a major exposure source: employees using unapproved tools can leak sensitive data with a simple copy-and-paste.

- Privacy incidents often come from ordinary technical failures and misconfigurations, not dramatic hacking attempts.

- Compliance is the minimum bar; you still need clear controls: opt-out of training, audit logs for AI actions, role-based AI access, and an admin kill-switch.

Read this article: : Top 6 AI-Powered Project Management Tools To Use In 2023

How AI Actually Collects Personal Data (No Myths, Just Mechanics)

Let's strip away the marketing language and talk about how AI actually works inside collaboration tools.

Not theory. Not fear-mongering. Just mechanics.

User-Generated Content: The Obvious Stuff

The first layer is what everyone expects.

Messages, files, comments, reactions, meeting notes, voice transcripts — anything users actively create. This is the core answer to how does AI collect personal data.

When AI features are enabled, this content isn't just stored. It's:

- Parsed

- Indexed

- Analysed for patterns

- Sometimes summarised or re-used in different contexts

If employees discuss contracts, HR issues, medical information, or financial details, AI systems can see it all. Whether they should is a different question.

Metadata: The Data You Didn't Realise Mattered

This is where most people underestimate the risk.

Metadata includes:

- Who communicates with whom

- When messages are sent

- How often teams interact

- File access history

- Response times

- Device and location signals

Even without reading message content, metadata can reveal power structures, performance issues, internal conflicts, and sensitive relationships.

It's one of the most valuable — and least visible — forms of AI data collection.

Behavioural Data: How AI Learns "How You Work"

AI doesn't just look at what you say. It watches what you do.

This includes:

- Login frequency

- Time spent in tools

- Features used or ignored

- Engagement levels

- Writing style and tone

- Collaboration patterns

This is how AI systems generate insights like productivity scores, sentiment analysis, or "smart" recommendations.

It's also why ai data collection tools free often come with hidden costs. Behavioural data is incredibly valuable, and free tools usually extract more of it.

Training Data vs Inference Data: Where Most Confusion Happens

This is the part vendors rarely explain clearly.

- Inference data is what AI uses in real time to respond to users (for example, generating a summary or suggestion).

- Training data is what's retained and reused to improve the AI model over time.

If an AI system "gets better" the more you use it, it's learning from someone's data. The only question is whether that data stays isolated to your organisation or feeds into a broader system.

Some platforms separate customer data cleanly.

Others blur the lines. Many users never know which camp they're in.

AI doesn't magically become smarter. It learns by observing, analysing, and retaining data — often far more than users realise. If privacy controls aren't explicit, limited, and enforced, convenience quietly turns into exposure.

That's why understanding how AI collects personal data isn't optional anymore. It's the foundation for every privacy, security, and compliance decision that follows.

Real AI Privacy Issues: Examples That Actually Happened

This isn't hypothetical.

These AI privacy issues examples are drawn from real-world incidents organisations have already dealt with — often after the damage was done.

Internal Chats Exposed Across Tenants

In multi-tenant collaboration platforms, data isolation is everything.

When it fails, the consequences are severe.

There have been documented cases where internal chat messages or document previews were accidentally surfaced to users in other organisations due to misconfigured AI indexing or caching layers.

The AI wasn't "hacked" — it was simply given broader access than intended.

This is a textbook ai privacy example of what happens when AI systems are allowed to scan shared infrastructure without airtight boundaries.

AI Assistants Summarising Confidential Conversations

AI meeting assistants and chat summarisation tools are marketed as productivity boosters. In practice, they've created serious exposure.

Examples include:

- HR disciplinary conversations summarised and visible to unintended viewers

- Legal discussions condensed into searchable snippets

- Executive strategy meetings auto-tagged and indexed

Once summarised, sensitive conversations become easier to discover, not harder. That's a major shift in risk most teams never planned for.

Training Data Appearing in Unrelated Outputs

One of the most worrying ai privacy issues examples involves data resurfacing where it clearly doesn't belong.

Users have reported:

- AI-generated responses containing fragments of other organisations' data

- Internal phrasing or terminology leaking into unrelated outputs

- Sensitive examples appearing during "generic" AI queries

This usually points to blurred lines between customer data and model training data — a problem that's hard to detect and even harder to prove after the fact.

Admins Unable to Fully Delete Historical Data

Deletion is where many AI privacy promises quietly fall apart.

In several platforms:

- Deleted messages remained in AI indexes

- "Archived" content was still accessible to AI tools

- Data removal didn't propagate to backups or training datasets

From a compliance perspective, this is dangerous. From a trust perspective, it's fatal. If data can't be fully deleted, control is an illusion.

Why People Keep Asking About ChatGPT

Users regularly ask whether tools like ChatGPT retain, reuse, or expose their data.

That's not because it's uniquely risky — it's because it made AI mainstream and forced people to think about privacy for the first time.

The broader lesson is simple:

If employees are asking these questions about consumer AI, they're already worried about what's happening inside your enterprise tools.

The Pattern You Can't Ignore

Across all these ai privacy examples, the root cause is the same:

AI was added faster than governance, access control, and transparency.

In March 2023, ChatGPT experienced a widely reported privacy incident that became a turning point for AI trust.

A bug in an open-source library caused some users to briefly see the titles of other users' conversations, and in limited cases, partial chat content and billing metadata.

This was not a cyberattack, but a session isolation failure—the kind of technical issue that emerges when systems scale faster than their safeguards.

Although the exposure window was short, the impact was significant.

Privacy incidents, even temporary ones, carry serious consequences. IBM estimates the average global cost of a data breach at $4.45 million, largely driven by loss of trust and regulatory scrutiny.

The ChatGPT incident resonated because it violated a basic expectation: that private conversations remain private by default.

In response, OpenAI moved quickly.

Affected features were disabled, the bug was patched, and a transparent post-mortem was published.

More importantly, OpenAI introduced lasting changes, including clearer data-usage disclosures, options to disable chat history and exclude conversations from training, shorter retention windows, and stronger separation between inference and training data.

The broader lesson is clear. As Gartner predicts that over 80% of enterprises will use generative AI by 2026, even small privacy failures can have outsized consequences.

Most AI-related data exposures stem from configuration and governance gaps, not malicious attacks.

The ChatGPT incident didn't prove AI is unsafe—it proved that AI systems fail in ordinary technical ways, and without explicit controls, those failures can expose real data.

The issue isn't that AI exists. It's that organisations adopted it without fully understanding where data flows, how long it's retained, and who — or what — can see it later.

Does AI Sell Your Data? What's Fact, What's Fear, What's Marketing Spin

This is one of the most searched — and most misunderstood — questions around AI: does AI sell your data? If you've ever browsed Reddit threads asking "does ai sell your data reddit", you've seen the confusion firsthand.

The problem isn't paranoia. It's vague language from vendors.

First, let's get one thing straight:

"Sell," "share," "retain," and "train on" are not the same thing.

But many AI companies blur these terms on purpose.

- Sell usually means exchanging raw data for money. Most reputable AI vendors claim they don't do this.

- Share often means providing access to third parties, partners, subprocessors, or affiliates.

- Retain refers to how long your data is stored, indexed, or cached.

- Train on means using your data to improve AI models — sometimes permanently.

When companies say "we don't sell your data," they're often technically telling the truth while avoiding the harder questions.

Here's the reality: most AI platforms don't make money by selling raw data.

They monetize insights, patterns, and aggregated intelligence derived from that data.

Usage trends, behavioural signals, feature adoption, sentiment analysis — these are extremely valuable, especially at scale. Raw messages aren't the product. What can be learned from them is.

This distinction matters because from a privacy and compliance perspective, outcomes matter more than semantics.

Whether your data is sold, shared, or used to train models, it has still left your direct control.

Free AI tools are where the risk increases sharply. Free almost always means:

- Broader data collection

- Longer retention periods

- Fewer opt-out controls

- Vague language around "service improvement"

That's why the old saying holds true in AI: if the tool is free, you are the dataset. Convenience is subsidised by data extraction, not generosity.

The key takeaway isn't that all AI tools are untrustworthy.

It's that organisations need to stop asking "do they sell our data?" and start asking better questions:

- Is our data used for training?

- Can we opt out — contractually, not just in settings?

- How long is data retained after deletion?

- Who else can access it, human or machine?

Because in AI, the biggest risk usually isn't outright selling. It's quiet reuse under vague promises — and that's where most companies get caught off guard.

Does ChatGPT Keep Your Data Private? The Questions People Actually Ask

This section exists because people keep asking the same things — does ChatGPT keep your data private, does OpenAI sell your data, and does ChatGPT share your data with government. The volume of these questions isn't driven by paranoia. It's driven by uncertainty.

Let's start with the most important distinction most users never hear explained properly: retention policies are not the same as training policies.

Retention refers to how long conversations are stored for operational, safety, or legal reasons. Training refers to whether that data is used to improve AI models. A platform can retain data without training on it, and it can train on data without keeping it forever. Mixing those concepts is where confusion — and mistrust — begins.

For consumer users of ChatGPT, OpenAI has stated that conversations may be retained for a limited period and, unless settings are changed, may be reviewed to improve systems.

This is not unusual in AI, but it does mean privacy is conditional, not absolute. For enterprise customers, the rules are different. Enterprise versions are designed so customer data is not used for model training by default, comes with stricter retention controls, and offers stronger contractual guarantees. Same AI, very different risk profile.

On the question does OpenAI sell your data, the answer is no in the traditional sense. OpenAI does not claim to sell user conversations as a commodity.

But that doesn't mean data is irrelevant to the business model. Like most AI providers, value is derived from improving systems, detecting abuse, and refining performance — all of which depend on access to data in some form. The absence of "selling" does not equal zero exposure.

The most sensitive question is whether ChatGPT shares data with governments.

The reality is less dramatic than online forums suggest. Lawful access requests — such as court orders or legally binding requests — are not the same as routine sharing. All major tech platforms are subject to this, not just AI tools. There is no evidence of blanket or proactive sharing, but there is compliance with valid legal obligations. That distinction matters.

The bigger risk most organisations overlook isn't governments at all. It's vendors, subprocessors, contractors, and internal misconfigurations.

Data exposure is far more likely to occur through overly broad access permissions, third-party integrations, poor tenant isolation, or unclear retention rules than through any state-level request.

The bottom line is this: ChatGPT — like most AI systems — can be used privately, but it is not private by default in every context.

The real question businesses should be asking isn't "can we trust this AI?" but "do we understand the controls well enough to use it safely?" Because in AI, privacy failures usually come from assumptions, not malice.

Read this article: : Top 6 AI-Powered Project Management Tools To Use In 2023

AI Privacy Issues by the Numbers (Why This Isn't Overblown)

The Scale of AI-Related Data Exposure

AI privacy risk isn't theoretical anymore. Industry research shows that over 40% of organisations have already experienced AI-related data exposure.

This includes sensitive data being processed by AI tools without approval, improper handling of training data, and unauthorised use of external AI services.

Most of these incidents never make headlines because they don't fit the classic definition of a "data breach." But from a compliance, legal, and trust standpoint, the impact is the same — data left organisational control.

Shadow AI Is Now the Default, Not the Exception

One of the biggest contributors to AI privacy risk is shadow AI — AI tools used without IT or security approval.

Gartner estimates that by 2026, more than 80% of enterprises will be using generative AI, and a large portion of that usage will happen outside formal governance.

Employees routinely paste internal documents into public AI tools, use browser-based AI assistants to summarise customer data, or connect free AI tools to work accounts.

This isn't malicious behaviour. It's convenience-driven — and that's exactly why it's dangerous.

Human Behaviour Is the Primary Risk Factor

Despite common assumptions, attackers are not the biggest problem. People are.

Multiple studies show that 60–70% of data exposure incidents are caused by human behaviour, not external hacking.

AI makes this worse by lowering the barrier to misuse. What once required exporting files, downloading data, or bypassing controls now takes a single copy-and-paste into an AI prompt.

AI didn't create the human risk. It amplified it.

Unapproved AI Tool Usage Is Widespread

Security teams consistently report that one in three employees uses unapproved AI tools at work.

These tools typically lack:

- Enterprise-grade access controls

- Clear data retention limits

- Contractual guarantees around data usage

- Audit logs or deletion enforcement

Once data enters these systems, organisations often have no visibility — and no practical way to retrieve or delete it.

But do those numbers matter i hear you asking?

These statistics explain why AI privacy concerns aren't exaggerated. The threat model has changed. The biggest risk is no longer a sophisticated external attack — it's well-meaning employees using powerful AI tools without guardrails.

Until organisations address AI governance, visibility, and education, the numbers will keep moving in the wrong direction — and privacy failures will continue to look "unexpected" while being entirely predictable.

Why AI Struggles to Keep Information Secure

AI privacy failures aren't usually caused by bad intentions.

They happen because of how AI systems are designed, integrated, and rushed into production. If you understand the mechanics, the risk becomes obvious.

AI Needs Broad Access to Be "Helpful"

The core problem is simple: AI only works well when it can see everything.

To summarise conversations, detect sentiment, surface insights, or make recommendations, AI systems need access to:

- Messages and files

- Meeting transcripts

- User behaviour and activity data

- Historical content for context

The more useful the AI feature, the broader the access it requires.

From a security perspective, that's a nightmare. Wide access increases blast radius. One mistake doesn't expose one file — it exposes everything the AI can see.

This is the fundamental tension in AI privacy and security: usefulness and minimised access pull in opposite directions.

Over-Permissioned Integrations Are the Norm

Most AI tools are not standalone systems.

They're layered on top of:

- Chat platforms

- Document libraries

- Calendars

- CRMs

- HR systems

To "just work," AI integrations are often granted far more permissions than they actually need. Read access becomes read/write. Scoped access becomes global.

Temporary access becomes permanent.

Once those permissions are granted, they're rarely reviewed. Over time, AI systems quietly accumulate access across systems — creating a single point of failure that security teams didn't design intentionally.

Poor Tenant Isolation Still Exists

In theory, modern SaaS platforms isolate each customer cleanly. In practice, AI complicates this.

AI relies on shared infrastructure:

- Shared indexes

- Shared caches

- Shared inference pipelines

If tenant isolation is imperfect — even briefly — data can bleed across boundaries.

That's how private chats, document titles, or metadata end up visible to the wrong users. Not because someone was hacked, but because the AI layer crossed a line it wasn't meant to.

This is one of the most common answers to why does AI not keep information secure: isolation is harder at AI scale.

Lack of Granular Audit Logs Hides the Damage

Traditional systems log human actions well. AI actions? Not so much.

In many platforms, administrators can't clearly see:

- What data the AI accessed

- When it accessed it

- Why it accessed it

- What outputs were generated from it

Without granular audit logs, organisations can't investigate incidents properly — or even detect them in the first place. If you can't prove what the AI touched, you can't prove what was exposed.

That's not a security issue. That's a visibility failure.

AI security usually comes after the feature launch, not before it. Vendors race to ship AI capabilities because that's what the market rewards. Governance, permission models, auditability, and deletion logic follow later — if they follow at all.

That's the uncomfortable reality. AI doesn't struggle with security because it's careless. It struggles because security is treated as a retrofit, while AI is treated as a differentiator.

And in systems that process sensitive conversations, that order matters more than most organisations realise.

AI Privacy Laws: What You're Actually Required to Do

AI privacy laws aren't a grey area anymore.

If you're using AI inside collaboration tools, you already have legal obligations, whether you've thought about them or not.

The problem is that many organisations confuse compliance with safety. They're not the same thing.

GDPR and UK GDPR: Still the Foundation

In the UK and EU, GDPR and UK GDPR remain the primary legal frameworks governing AI and personal data.

AI systems are not exempt just because they're "automated" or "intelligent."

Under GDPR, AI-powered collaboration tools must still comply with:

- Lawful basis for processing personal data

- Transparency about how data is used

- Data minimisation and purpose limitation

- Security of processing

If your AI feature processes chat messages, files, voice transcripts, or behavioural data, it is processing personal data. There's no loophole for "experimental AI" or "productivity features."

Data Minimisation and Purpose Limitation

These two principles are where many AI deployments quietly fail.

- Data minimisation means collecting only the data strictly necessary for a defined purpose.

- Purpose limitation means using data only for the purpose originally stated.

AI struggles here because it's often deployed broadly "just in case" it's useful. If your collaboration tool collects full chat histories, metadata, and behavioural signals to power a feature that only needs summaries, you may already be over-collecting.

From a legal standpoint, "we might need it later" is not a valid justification.

The Right to Explanation (Where Applicable)

Under GDPR, individuals have the right to meaningful information about automated decision-making that significantly affects them. This is often referred to as the right to explanation.

In practice, this becomes relevant when AI is used for:

- Performance insights

- Sentiment analysis

- Engagement scoring

- Behavioural or productivity assessments

If AI outputs influence HR decisions, access levels, or employee evaluations, organisations may be required to explain:

- What data was used

- How the decision was made at a high level

- How individuals can challenge or contest outcomes

"Because the AI said so" is not a defensible position.

AI-Specific Regulations: What's Actually Coming

New AI regulations are emerging, but the direction is already clear — and not speculative.

Regulators are focusing on:

- Transparency of AI systems

- Risk classification of AI use cases

- Stronger obligations for high-risk AI (including workplace monitoring)

- Accountability for vendors and deployers

The key shift is this: responsibility is shared. You can't outsource compliance entirely to your software vendor. If you deploy AI internally, you own part of the risk.

Compliance does not mean your data is safe. It means you've met the minimum legal threshold.

AI systems can be fully GDPR-compliant and still:

- Over-collect data

- Retain it longer than users expect

- Expose it through misconfiguration

- Erode trust internally

Think of AI privacy laws as the floor, not the ceiling. If your strategy stops at "are we compliant?", you're already behind. The organisations that avoid AI privacy disasters go further — by design, not by regulation.

What to Look for in an AI-Safe Collaboration Tool

This is the point where theory meets buying decisions.

Once you accept that AI introduces real privacy and security risk, the question becomes practical: what should you demand from a collaboration tool before trusting it with sensitive data?

Use the checklist below as a hard filter — not a nice-to-have list.

Clear AI Privacy Policy (Not Buried)

If you have to dig through legal pages or vague blog posts to understand how AI handles your data, that's already a red flag.

An AI-safe collaboration tool should spell out, in plain language:

- What data the AI can access

- What data is retained

- What data is excluded

- How long data is kept

If the policy relies on phrases like "may be used" or "from time to time" without specifics, assume the widest possible interpretation — and the highest risk.

Opt-Out of Training on Customer Data

This is non-negotiable for serious organisations.

You should be able to contractually opt out of having your chats, files, meetings, or behavioural data used to train AI models.

Not hidden behind settings. Not "by request." Explicit, enforced, and documented.

If a vendor can't clearly say "your data is never used for training", then you have to assume it might be.

Data Residency Controls

Where your data lives still matters — especially with AI involved.

An AI-safe platform should offer:

- Clear data residency options

- Alignment with your regulatory environment

- Transparency about where AI processing occurs

If data is stored in one region but processed elsewhere "for performance reasons," you need to know that upfront. AI pipelines often cross borders quietly.

Full Audit Logs for AI Actions

Traditional audit logs track people. AI-safe tools also track machines.

You should be able to see:

- What data the

- When it accessed it

- Which feature triggered the access

- What output was generated

If you can't audit AI behaviour, you can't investigate incidents, prove compliance, or defend decisions. No logs means no accountability.

Role-Based AI Access

Not everyone needs AI access — and that's exactly the point.

A secure collaboration tool lets you:

- Limit AI features by role

- Restrict access by department or data type

- Prevent AI from touching sensitive spaces (HR, Legal, Finance)

Blanket AI access across the organisation is convenient, but it's also reckless. Least-privilege should apply to AI just as much as humans.

Admin Kill-Switch for AI Features

This is the ultimate control — and one many vendors avoid offering.

Administrators should be able to:

- Disable AI features instantly

- Pause AI processing during incidents

- Roll back AI access without waiting on vendor support

If something goes wrong, speed matters. An AI kill-switch turns a potential breach into a contained event instead of a drawn-out crisis.

An AI-safe collaboration tool doesn't rely on trust or promises.

It relies on controls. If a vendor can't meet most of the criteria above, the risk isn't theoretical — it's built into the product.

AI Privacy Policy Red Flags You Shouldn't Ignore

AI privacy policies are where risk is either made obvious — or carefully hidden.

Most organisations skim them once, tick a box, and move on.

That's a mistake. If you see any of the red flags below, you should assume maximum data exposure, not minimum.

"May Use Data to Improve Services" (Without Limits)

This is the most common — and most dangerous — phrase in any AI privacy policy.

On its own, it sounds harmless. In reality, it can mean:

- Using your data to train AI models

- Retaining data indefinitely

- Reusing data across customers or products

If "improve services" is not clearly defined, limited, and scoped, it gives the vendor broad freedom to reuse your data in ways you didn't explicitly agree to.

In AI terms, vague language equals broad permission.

No Defined Retention Period

If a privacy policy doesn't clearly state how long data is kept, that's a major warning sign.

Without a defined retention period:

- Data may live indefinitely in logs, indexes, or backups

- "Deleted" content may still exist in AI systems

- Compliance with deletion requests becomes questionable

Retention should be measured in days or months, not phrases like "as long as necessary". If the policy avoids specifics, assume long-term storage.

No Customer-Controlled Deletion

A strong AI privacy policy gives customers direct control over deletion.

Red flags include:

- Deletion "by request only"

- Deletion handled manually by support

- No mention of deletion from AI training or backups

If customers can't delete their own data — and confirm it's gone — then control sits with the vendor, not you. From a compliance and risk standpoint, that's unacceptable.

Vague References to "Trusted Partners"

This phrase appears in countless policies and explains almost nothing.

Ask yourself:

- Who are these partners?

- What data do they access?

- Why do they need it?

- Are they processors or independent controllers?

If the policy doesn't name categories, responsibilities, and safeguards, "trusted partners" becomes a catch-all for third-party access you can't see or audit.

If an AI privacy policy relies on vague wording, flexible interpretations, or undefined limits, it's not designed to protect you — it's designed to protect the vendor.

Clear policies reduce risk. Ambiguous policies shift it onto your organisation. When it comes to AI, that's not a legal nuance — it's a strategic decision you'll be held accountable for later.

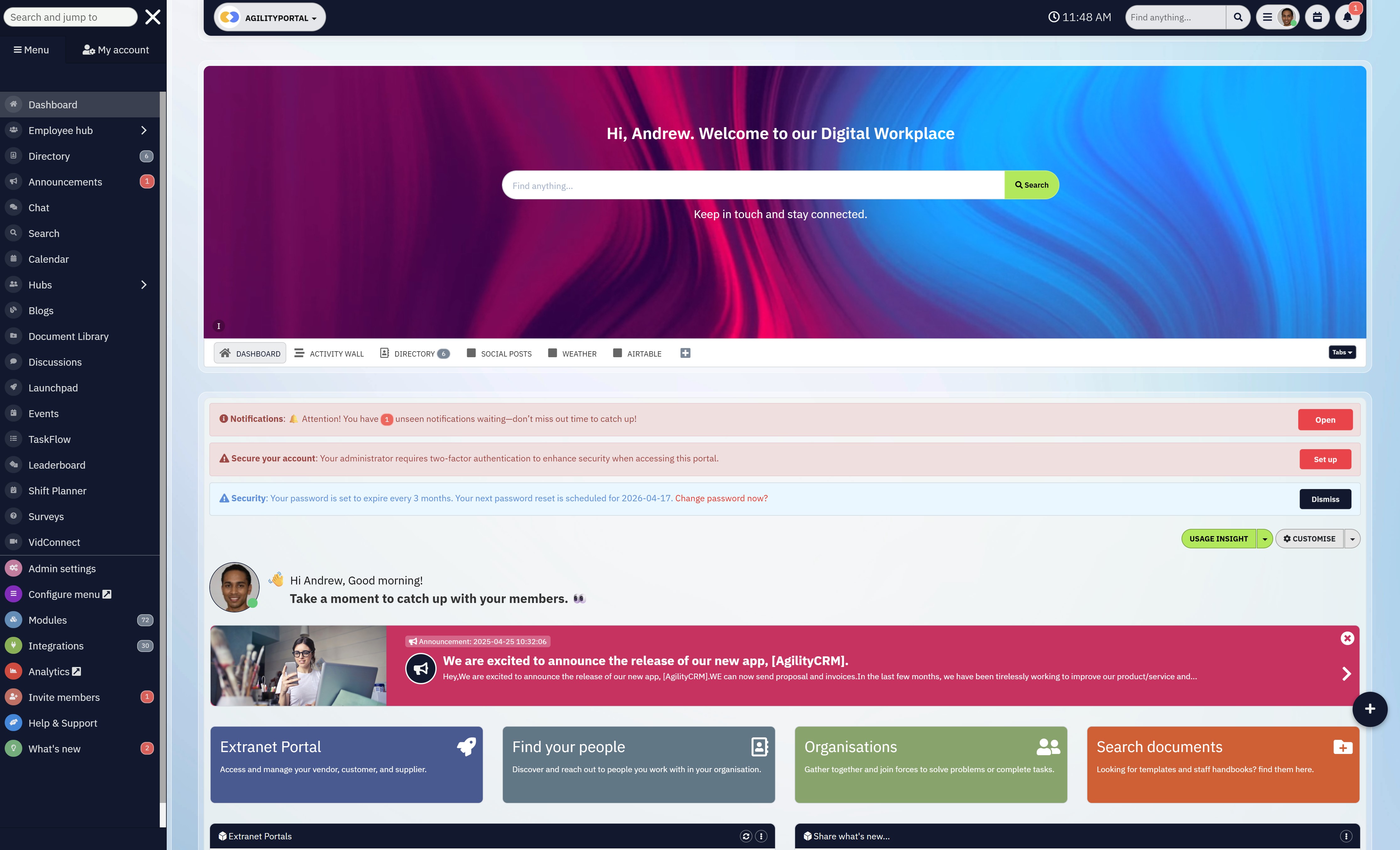

Why Security-First Teams Choose AgilityPortal

Most collaboration tools rushed AI into the product and figured out privacy later.

AgilityPortal did it the other way around.

AI is powerful — but only when it's controlled, auditable, and designed for real organisations, not data experiments.

AgilityPortal gives you modern collaboration without gambling your data. Chats, documents, video, projects, and portals all live in one secure workspace, with AI features that respect boundaries instead of crossing them.

What sets AgilityPortal apart

- AI that works inside your rules — not around them

- Clear data ownership (your data stays yours, full stop)

- No training on customer data — contractually enforced

- Role-based AI access for HR, Legal, Finance, and Exec teams

- Full audit trails so you know exactly what AI touched and when

- Admin controls to pause or disable AI instantly if needed

This isn't AI built for hype. It's AI built for trust, compliance, and long-term use.

If your team handles sensitive conversations, regulated data, or external partners, AgilityPortal lets you move fast without losing control. No shadow AI. No vague policies. No surprises six months later.

👉 Stop choosing between productivity and privacy.

Choose a collaboration platform designed for organisations that take both seriously.

Choose a collaboration platform designed for organisations that take both seriously.

Final Take: AI Isn't the Risk — Blind Adoption Is

AI in collaboration tools is no longer optional. It's already embedded in chat, documents, meetings, search, and analytics — whether organisations planned for it or not.

The real risk isn't AI itself. It's adopting AI without understanding what it touches, what it keeps, and who controls it.

Too many companies roll out AI features because competitors are doing it, not because they've assessed the data flows, permissions, retention policies, or failure modes. That's how privacy incidents happen — quietly, predictably, and after the fact. Not through malice, but through assumptions.

The safest organisations aren't anti-AI. They're deliberate. They ask hard questions before rollout, limit AI access by design, demand transparency from vendors, and treat AI like any other high-impact system — governed, auditable, and reversible.

AI will keep getting more powerful. That's not the problem.

Blind adoption is.

Categories

Blog

(2668)

Business Management

(326)

Employee Engagement

(213)

Digital Transformation

(178)

Growth

(122)

Intranets

(120)

Remote Work

(61)

Sales

(48)

Collaboration

(40)

Culture

(29)

Project management

(29)

Customer Experience

(26)

Knowledge Management

(21)

Leadership

(20)

Comparisons

(7)

News

(1)

Ready to learn more? 👍

One platform to optimize, manage and track all of your teams. Your new digital workplace is a click away. 🚀

Free for 14 days, no credit card required.