Insight Blog

Agility’s perspectives on transforming the employee's experience throughout remote transformation using connected enterprise tools.

How To Detect and Prevent Bots From Entering Your Website

Bot traffic is non-human traffic to a website. Abusive bot traffic can impact performance and hurt business objectives. Learn how to stop abusive bots.

Bots are programs that interact with systems or users over the internet or another network. We use bots to automate many tasks. For example, a chatbot is a type of bot that answers user queries.

While bots are useful, there are plenty of malicious bots on the internet as well. You must protect your website from these harmful bots for security reasons. In this guide, we'll look at 9 ways to detect and prevent bots from entering your website.

9 ways to detect and prevent bots from accessing your website

Before we look at how to detect and prevent bots from accessing your website, let's understand the damage malicious bots can cause.

a. Scraping content: Malicious bots can scrape a website's content, copying and republishing it on other sites without permission. This can lead to decreased traffic for the original website and potential legal issues.

b. Spamming comments: Spam bots can post spam comments on a website, which annoys users and makes them less likely to consider your website a credible source of information.

c. DDoS attacks: Distributed denial-of-service (DDoS) attacks involve a large number of bots flooding a website with traffic, resulting in the site becoming unavailable to users. This can cause significant disruption and damage to a website's reputation.

d. Stealing sensitive information: Some malicious bots are designed to steal sensitive information, such as login credentials or credit card numbers. This can result in identity theft or financial loss for both the website and its users.

All website owners/managers should protect their websites from harmful bots.

In the following steps, we'll cover ways to detect bots and ways to prevent them from causing harm to your website.

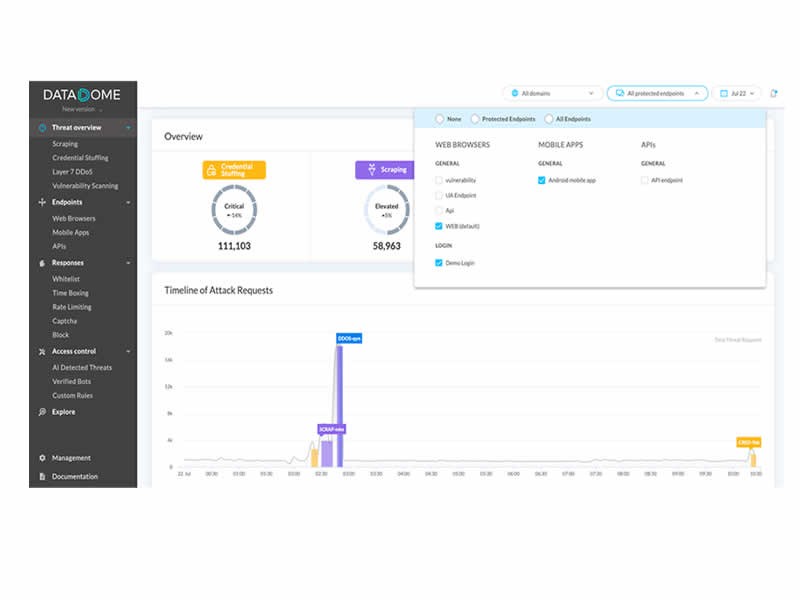

#1.Use bot detection software

Bot detection software refers to dedicated tools that detect bots. Using bot detection software can help you identify and block harmful bots from accessing your website, protecting it from various types of attacks and potential damage.

Whether bot detection software is necessary for your website depends on a number of factors, including the size and complexity of your site, the type of content you publish, and the potential risks your site faces. However, business websites should never compromise on security, and they should use bot detection tools.

With a bot detection tool, you don't have to worry about monitoring your website and removing bots manually. These SaaS tools do the work for you and give you and your customers a hassle-free experience.

#2.Check your server logs

Checking server logs is an effective way to detect bots on a website since they provide detailed information about the traffic coming to your site. You can identify patterns that may indicate bot activity by analyzing server logs.

For example, bots may use unusual or suspicious IP addresses to access your website. You can identify potential bot activity by looking for these addresses in your server logs.

Moreover, bots exhibit different traffic patterns than human users. For example, they may visit a large number of pages in a short period or make requests at a much faster rate. You can identify anomalies that indicate bot activity by analyzing your server logs.

Make it a habit to monitor your server logs to detect bots on your website.
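As a rough illustration of this kind of log analysis, the sketch below counts requests per IP address and flags the busiest ones. It assumes your server writes logs in the Common Log Format, where each line starts with the client IP. The function names, the sample lines, and the threshold are all hypothetical, so adjust them to your own traffic.

```python
import re
from collections import Counter

# Matches the start of a Common Log Format line: client IP, identity, user, [timestamp
LOG_LINE = re.compile(r"^(?P<ip>\S+) \S+ \S+ \[")


def requests_per_ip(lines):
    """Count how many requests each client IP made."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match:  # skip lines that are not in the expected format
            counts[match.group("ip")] += 1
    return counts


def suspicious_ips(lines, threshold):
    """Return the IPs whose request count exceeds the (hypothetical) threshold."""
    counts = requests_per_ip(lines)
    return sorted(ip for ip, n in counts.items() if n > threshold)


# Made-up sample lines using documentation-reserved IP ranges
sample_log = [
    '203.0.113.7 - - [10/Jan/2024:10:00:01 +0000] "GET /a HTTP/1.1" 200 512',
    '203.0.113.7 - - [10/Jan/2024:10:00:01 +0000] "GET /b HTTP/1.1" 200 512',
    '203.0.113.7 - - [10/Jan/2024:10:00:02 +0000] "GET /c HTTP/1.1" 200 512',
    '198.51.100.4 - - [10/Jan/2024:10:00:05 +0000] "GET /a HTTP/1.1" 200 512',
]

print(suspicious_ips(sample_log, threshold=2))
```

A raw request count is only a starting point. In practice you would likely count within a time window and exclude known good crawlers before blocking anything.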

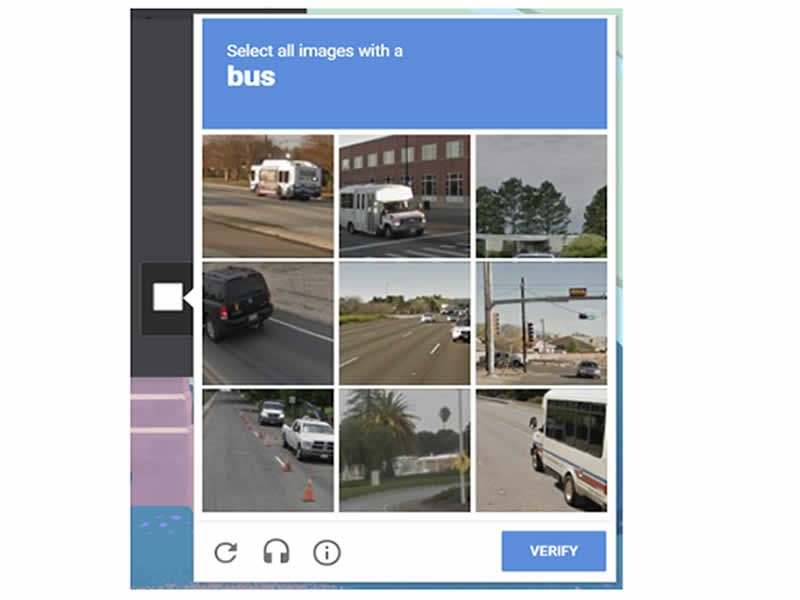

#3.Use CAPTCHAs

A CAPTCHA is a type of challenge-response test used in computing to determine whether the user is a human. That makes it an effective tool for detecting bots. You can use CAPTCHA codes to detect whether a website visitor is a human or a bot.

We can use CAPTCHAs to prevent automated bots from interacting with websites in ways that could be disruptive or malicious, such as filling out forms, clicking on links, or accessing restricted content. CAPTCHAs prevent these activities by presenting a challenge that is easy for humans to solve but difficult for bots to complete.

By using CAPTCHAs, websites can help ensure that their content is only accessed by humans and not by automated bots. Using CAPTCHAs correctly is one of the simplest ways to prevent bots from entering your website.

#4.Check for bot-like behavior

Bot-like behavior refers to actions or activities typically associated with automated bots or programs rather than human users. Some examples of bot-like behavior might include:

- Rapidly clicking on links or filling out forms

- Accessing restricted content or features on a website

- Scraping data from websites

- Creating fake accounts or profiles

- Sending automated messages or spam

- Flooding a website with traffic in an attempt to disrupt service

Bots can perform these actions much faster and more accurately than humans, making them a useful tool for malicious actors who want to manipulate websites or gather information.

Checking your website traffic for bot-like behavior is an effective way to detect the presence of bots on your website.
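Some of the behaviors listed above can be turned into simple checks. The sketch below is one possible way to do that, assuming you already collect per-session data. The `Session` fields and every threshold here are hypothetical values chosen for illustration, not recommended settings.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Session:
    """Hypothetical summary of one visitor session."""
    request_count: int
    duration_seconds: float
    form_fill_seconds: Optional[float]  # None if no form was submitted
    accounts_created: int


def bot_signals(session, max_rps=5.0, min_form_seconds=2.0, max_accounts=3):
    """Return the list of bot-like signals this session triggers."""
    signals = []
    # Treat very short sessions as one second long to avoid dividing by zero
    rate = session.request_count / max(session.duration_seconds, 1.0)
    if rate > max_rps:
        signals.append("rapid requests")
    if (session.form_fill_seconds is not None
            and session.form_fill_seconds < min_form_seconds):
        signals.append("form filled too quickly")
    if session.accounts_created > max_accounts:
        signals.append("many accounts created")
    return signals


fast_session = Session(request_count=600, duration_seconds=60.0,
                       form_fill_seconds=0.4, accounts_created=0)
slow_session = Session(request_count=12, duration_seconds=300.0,
                       form_fill_seconds=25.0, accounts_created=1)

print(bot_signals(fast_session))
print(bot_signals(slow_session))
```

Returning the triggered signals instead of a single yes or no makes it easier to review borderline sessions before you block them.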

#5.Keep track of traffic patterns

The traffic pattern of a website refers to how users interact with the site over a period of time. This can include the number of visitors to the site, the pages they visit, how long they stay on each page, and the actions they take while on the site. You can also use traffic pattern data to detect bots on your website.

Understanding the traffic pattern of a website can be helpful for other reasons as well. For example, it can help website owners and administrators to identify trends and patterns in user behavior and make informed decisions about optimizing and improving the site.

Several tools and services are available that can help website owners and administrators track and analyze traffic patterns. These tools can provide detailed reports and analytics on various aspects of a website's traffic, including page views, clicks, conversions, and more. You can use these tools to analyze traffic patterns on your site to detect malicious bots.
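If you export hourly request counts from one of these tools, a simple statistical check can highlight unusual spikes. The sketch below compares each hour with a baseline period using a z-score. The function name, the baseline length, and the threshold of 3 are assumptions made for this example.

```python
from statistics import mean, pstdev


def find_spikes(hourly_requests, baseline_hours=24, z_threshold=3.0):
    """Return the indexes of hours whose traffic is far above the baseline."""
    baseline = hourly_requests[:baseline_hours]
    average = mean(baseline)
    # Fall back to 1.0 so perfectly flat traffic does not divide by zero
    spread = pstdev(baseline) or 1.0
    return [
        hour
        for hour, count in enumerate(hourly_requests)
        if hour >= baseline_hours and (count - average) / spread > z_threshold
    ]


# Made-up data: six baseline hours followed by three hours to check
traffic = [100, 110, 90, 105, 95, 100, 102, 900, 98]
print(find_spikes(traffic, baseline_hours=6))
```

A spike is not proof of bot activity, since a marketing campaign can produce the same shape, so treat the flagged hours as a prompt to inspect the underlying requests.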

#6.Use rate limiting

Rate limiting is a technique used to control the rate at which a particular action or event can occur. For example, we use rate limiting to prevent abuse or excessive use of a service or resource, such as a website or an API (Application Programming Interface). It's also an effective way to prevent bots from accessing your website.

By limiting the number of actions that a particular IP address can make within a specific time, you can ensure that bots cannot flood the site with traffic or make excessive requests.

For example, you could set a rate limit of 10 requests per minute for a particular page. Then, if a bot tried to make more than 10 requests to that page within one minute, it would be blocked until the rate limit resets.

Rate limiting helps prevent bots from scraping content, creating fake accounts, or otherwise interacting with the site in disruptive ways. However, rate limiting alone is not enough to stop sophisticated bots, which can spread their requests across many IP addresses.
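The 10-requests-per-minute example can be sketched as a fixed-window rate limiter keyed by IP address. This is a minimal in-memory illustration, not a production design: the class name and parameters are hypothetical, and a real deployment would usually rely on the rate limiting built into your web server, framework, or CDN.

```python
import time


class FixedWindowRateLimiter:
    """Allow at most `limit` requests per IP in each window of `window_seconds`."""

    def __init__(self, limit=10, window_seconds=60, clock=time.monotonic):
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the limiter can be tested without waiting
        # Maps client IP -> (window start time, requests seen in that window)
        self._windows = {}

    def allow(self, ip):
        """Return True if the request may proceed, False if it should be blocked."""
        now = self.clock()
        start, count = self._windows.get(ip, (now, 0))
        if now - start >= self.window_seconds:
            start, count = now, 0  # the window expired, so the limit resets
        if count >= self.limit:
            self._windows[ip] = (start, count)
            return False
        self._windows[ip] = (start, count + 1)
        return True


limiter = FixedWindowRateLimiter(limit=10, window_seconds=60)
decisions = [limiter.allow("203.0.113.7") for _ in range(11)]
print(decisions.count(True), decisions.count(False))
```

A fixed window is the simplest variant to reason about. It does let a client send a burst at the end of one window and another at the start of the next, which sliding-window or token-bucket limiters smooth out.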

#7.Add JavaScript challenges

A JavaScript challenge is a challenge-response test that uses JavaScript to determine whether the visitor on a website is using a real browser. JavaScript challenges work by giving the visitor's browser a task that requires it to execute a specific action or solve a problem using JavaScript.

For example, a JavaScript challenge might require a click on a particular button/link or ask the browser to solve a simple math problem using JavaScript. Since many simple bots do not run a full browser and cannot execute JavaScript, they generally cannot complete these challenges. Bots built on headless browsers can execute JavaScript, so these challenges work best alongside the other measures in this guide.
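The server side of such a challenge might look like the sketch below. It assumes the page's JavaScript receives a random nonce, computes a SHA-256 hash from it, and sends the result back. All function names and the `challenge:` prefix are hypothetical, and the sketch leaves out expiry and replay protection, which a real implementation would need.

```python
import hashlib
import hmac
import secrets

SECRET_KEY = secrets.token_bytes(32)  # hypothetical per-server secret


def sign(nonce):
    """Sign a nonce so the server can recognize challenges it issued."""
    return hmac.new(SECRET_KEY, nonce.encode(), hashlib.sha256).hexdigest()


def issue_challenge():
    """Create a random nonce and its signature to embed in the page."""
    nonce = secrets.token_hex(8)
    return nonce, sign(nonce)


def expected_answer(nonce):
    """The value the page's JavaScript is expected to compute from the nonce."""
    return hashlib.sha256(("challenge:" + nonce).encode()).hexdigest()


def verify(nonce, signature, answer):
    """Accept the response only if the nonce is ours and the answer is correct."""
    if not hmac.compare_digest(sign(nonce), signature):
        return False  # this nonce was not issued by this server
    return hmac.compare_digest(expected_answer(nonce), answer)


nonce, signature = issue_challenge()
# Here Python stands in for the browser-side JavaScript that would compute the answer
browser_answer = expected_answer(nonce)
print(verify(nonce, signature, browser_answer))
```

A client that never runs the script has no answer to send back, so `verify` rejects it. Signing the nonce means the server does not have to store every challenge it hands out.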

#8.Ensure SSL encryption and secure passwords

Secure Sockets Layer (SSL) encryption, now implemented by its successor Transport Layer Security (TLS), helps protect websites from bots because it secures the connection between the browser and the website. This protects your website's traffic against interception and tampering by malicious third parties.

Secure passwords are just as important because strong passwords protect against unauthorized access to your accounts and personal information. Malicious actors commonly use bots to guess or crack passwords. Using strong and unique passwords makes it much more difficult for bots to crack your password.

Make sure you use SSL encryption and secure passwords to protect your websites from bots.
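To see why longer and more varied passwords are harder for bots to guess, you can estimate the size of the search space a brute-force bot would face. The sketch below is a rough upper bound only: the function name is hypothetical, and the estimate ignores dictionary words and reused passwords, which real attackers try first.

```python
import math
import string


def search_space_bits(password):
    """Estimate brute-force effort in bits from length and character classes used."""
    alphabet = 0
    if any(c in string.ascii_lowercase for c in password):
        alphabet += len(string.ascii_lowercase)
    if any(c in string.ascii_uppercase for c in password):
        alphabet += len(string.ascii_uppercase)
    if any(c in string.digits for c in password):
        alphabet += len(string.digits)
    if any(c in string.punctuation for c in password):
        alphabet += len(string.punctuation)
    if alphabet == 0:
        return 0.0
    # A password of length L over an alphabet of size A has A**L combinations
    return len(password) * math.log2(alphabet)


for candidate in ["password", "Tr0ub4dor&3x!"]:
    print(candidate, round(search_space_bits(candidate), 1))
```

Each extra bit doubles the number of guesses a bot needs, which is why adding length usually helps more than swapping one letter for a symbol.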

#9.Check for vulnerable APIs

Attackers can exploit APIs with vulnerabilities to gain unauthorized access to data or systems. Having vulnerable APIs on your website threatens its security and makes it much easier for bots to infiltrate. That makes it essential to check for vulnerable APIs.

Here's what you can do to check for vulnerable APIs:

- Perform a security assessment

- Use an API security scanner

- Review documentation and source code

- Monitor API usage

Identifying potential vulnerabilities in your APIs at an early stage keeps your website secure from malicious bots.
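For the "monitor API usage" step, one simple check is to look for API clients that are mostly being refused, since this can indicate a bot probing for access. The sketch below assumes you can export a list of (client ID, HTTP status) pairs from your API logs. The function name, the minimum request count, and the 50% ratio are hypothetical.

```python
from collections import defaultdict


def clients_with_high_denial_rate(events, min_requests=20, max_denied_ratio=0.5):
    """Flag clients whose requests mostly end in 401 or 403 responses."""
    totals = defaultdict(int)
    denied = defaultdict(int)
    for client_id, status in events:
        totals[client_id] += 1
        if status in (401, 403):  # unauthorized or forbidden
            denied[client_id] += 1
    return sorted(
        client_id
        for client_id, total in totals.items()
        if total >= min_requests and denied[client_id] / total > max_denied_ratio
    )


# Made-up events: key-b is refused on most of its requests
api_events = (
    [("key-a", 200)] * 30
    + [("key-b", 403)] * 25
    + [("key-b", 200)] * 5
    + [("key-c", 401)] * 3
)
print(clients_with_high_denial_rate(api_events))
```

The minimum request count keeps a client with a handful of failed calls, such as someone who mistyped a key, from being flagged.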

Protect your business and customers with bot detection and removal

The primary challenge is that bots keep growing more powerful as the cybercriminals who develop and operate them continue to improve them. Business websites must go the extra mile to ensure complete security for their customers. One of the several ways to do this is by implementing bot detection and prevention systems.

Follow these tips and suggestions to keep your website, business, and customers safe.
